How to Move a Site to a Different Domain without Downtime or 404s

Learn how Ansible and nginx helped me move a bunch of content to this domain with almost nothing breaking along the way.

Last week I talked about the lessons learned from creating content on 2 sites instead of 1, and today I’d like to go into how I technically made the move.

Ansible, nginx and a little bit of preparation let me confidently make the move in ~20 seconds.

My requirements for the move were pretty strict but reasonable:

- Semi-automated solution to eliminate human error

- No downtime on either site

- All old URLs had to continue to work

- Carry over Disqus comments

- Carry over social share counts

I failed on the last 2 points because of external circumstances I couldn’t control, but overall it was a successful move and I learned a few lessons.

# 10,000 Foot Overview of How Both Sites Were Set Up

Both sites are Jekyll blogs served by nginx. I originally set up the server with Ansible (a configuration management tool), and I use it to deploy updates as well.

I don’t use Docker here because it’s just a static site, and in this case using Docker makes things more complicated than just installing nginx directly on the server.

The tactics explained below will work for any site or web application.

# Creating a Workflow for How Things Will Go Down

Even with experience, I still fall back on the basics for nearly every problem: break 1 vague problem down into smaller, well-defined problems.

The vague problem here is “move stuff from 1 domain to another without breaking anything”.

I started with the low-hanging fruit: making sure the old URLs still work.

# Redirecting URLs With nginx

The first thing I did was go into my Ansible configuration for custom nginx rules related to the diveintodocker.com domain.

Then, I added this nginx rule:

```nginx
location ~ ^/blog/(.*)$ {
  return 301 https://nickjanetakis.com/blog/$1$is_args$args;
}
```

My URL structure was the same on both domains. Both use /blog/hello-world after the domain, so all I had to do was match on /blog/ and capture whatever comes after it.

Then nginx performs a permanent (301) redirect to the new domain name and carries over the captured part of the URL. That’s what $1 does: it evaluates to whatever (.*) matched in the location pattern, which is the blog post’s slug (for example, hello-world).

The $is_args$args part carries over any query string values: the ? in the URL followed by any parameters. $is_args expands to ? only when the request actually has a query string, so URLs without one don’t end up with a dangling ?. This is really important to include, because you might have UTM campaigns or other metadata in your URLs that you wouldn’t want to lose in the redirect.
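To see how the captured slug and $is_args$args fit together, here’s a small Python sketch that models the rule above. It isn’t how nginx works internally, just a model of the URL rewrite, assuming old URLs look like https://diveintodocker.com/blog/<slug>. The function name redirect_target is hypothetical.

```python
import re
from typing import Optional
from urllib.parse import urlsplit

# Same pattern as the nginx rule: location ~ ^/blog/(.*)$
BLOG_PATTERN = re.compile(r"^/blog/(.*)$")
NEW_ORIGIN = "https://nickjanetakis.com"


def redirect_target(url: str) -> Optional[str]:
    """Return the Location the blog rule would send, or None if the rule doesn't match."""
    parts = urlsplit(url)
    match = BLOG_PATTERN.match(parts.path)
    if match is None:
        return None
    slug = match.group(1)  # plays the role of nginx's $1
    # $is_args is "?" only when there is a query string; $args is the query string itself.
    is_args = "?" if parts.query else ""
    return f"{NEW_ORIGIN}/blog/{slug}{is_args}{parts.query}"


if __name__ == "__main__":
    print(redirect_target("https://diveintodocker.com/blog/hello-world?utm_source=newsletter"))
```

Running it prints https://nickjanetakis.com/blog/hello-world?utm_source=newsletter, and the same call without a query string returns the URL with no trailing ?.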

# The Blog Redirects Are Done, What’s Next?

Previously, my course had its own URL space because my intention was to have multiple courses on that domain, but now it just redirects to the home page.

```nginx
location ~ ^/courses/dive-into-docker$ {
  return 301 /$is_args$args;
}
```

That means if you go to diveintodocker.com/courses/dive-into-docker, it will redirect you to diveintodocker.com. It’s similar to the blog redirect, except it doesn’t capture anything, since it’s a fixed URL with no variable parts (like a blog post title).

Here are a few other redirects. Try to guess what they do:

```nginx
location ~ ^/courses/$ {
  return 301 https://nickjanetakis.com/courses/$is_args$args;
}

location ~ ^/about$ {
  return 301 https://nickjanetakis.com/about$is_args$args;
}

location ~ ^/updates$ {
  return 301 https://nickjanetakis.com/newsletter$is_args$args;
}
```
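Because every one of these patterns is anchored with ^ and $, each behaves like an exact path lookup. Here’s a hedged Python sketch that models them as a table. It assumes the old site was served over https, and the names EXACT_REDIRECTS and exact_redirect are hypothetical. The relative / in the course rule resolves against the old domain, so it’s written out in full here.

```python
from typing import Dict, Optional
from urllib.parse import urlsplit

OLD_ORIGIN = "https://diveintodocker.com"  # assumption: the old site was served over https
NEW_ORIGIN = "https://nickjanetakis.com"

# Each nginx pattern is anchored with ^...$, so only an exact path match triggers it.
EXACT_REDIRECTS: Dict[str, str] = {
    "/courses/dive-into-docker": OLD_ORIGIN + "/",  # relative "/" stays on the old domain
    "/courses/": NEW_ORIGIN + "/courses/",
    "/about": NEW_ORIGIN + "/about",
    "/updates": NEW_ORIGIN + "/newsletter",
}


def exact_redirect(url: str) -> Optional[str]:
    """Return the redirect target for an exact-path rule, or None if no rule matches."""
    parts = urlsplit(url)
    target = EXACT_REDIRECTS.get(parts.path)
    if target is None:
        return None
    # Equivalent to appending $is_args$args: add "?query" only when a query exists.
    return f"{target}?{parts.query}" if parts.query else target
```

Note that /about/ (with a trailing slash) and /courses (without one) return None, just as they wouldn’t match the anchored nginx patterns.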

So that takes care of all of the redirects. All of those rules went into my Ansible configuration, but I didn’t apply them yet. This was a preparation step, because I wasn’t ready to deploy the new versions of both sites.

# Preparing a Couple of Terminal Windows

I mentioned wanting a semi-automated solution because I needed to change multiple sites at once, which meant deploying new versions of both the Dive Into Docker site and this site, along with re-deploying nginx.

At this point I had rigorously tested both new sites in development, so I was 100% sure they would be good to go in production (famous last words). All I had to do was make them live.

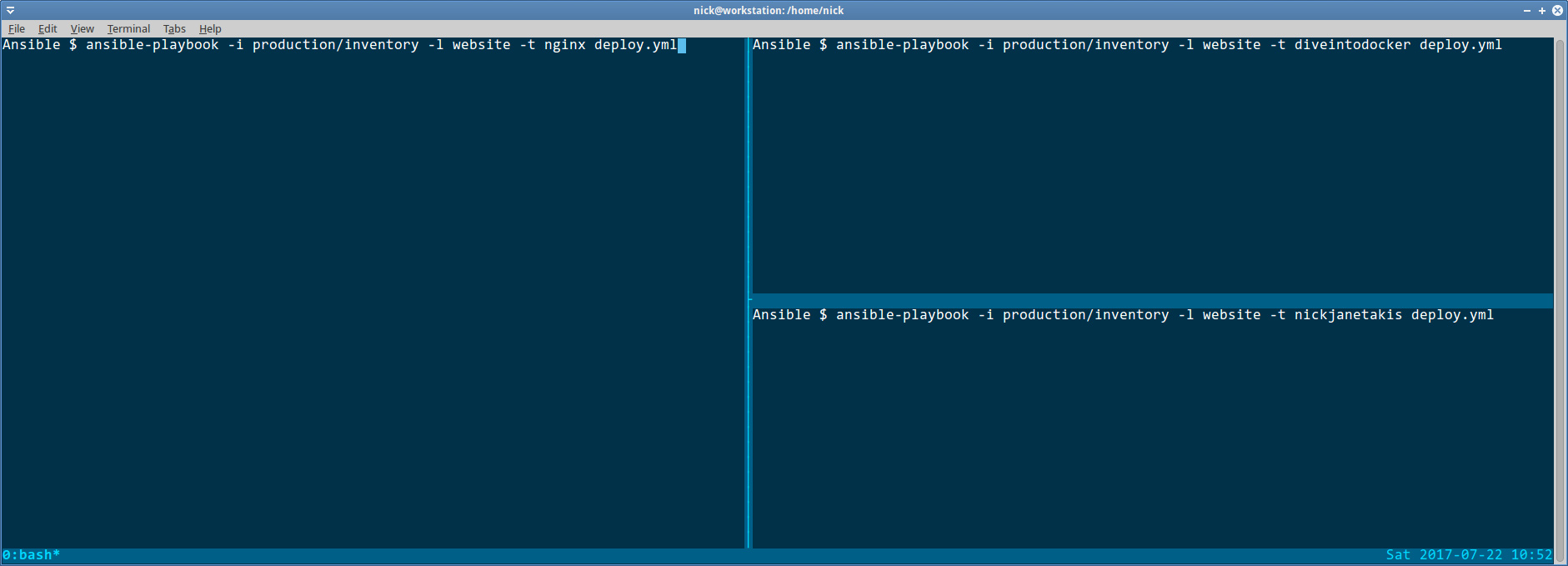

So I used tmux and split a terminal in thirds to prepare for all of the deploy commands.

3 tmux panels in 1 terminal window:

Click the image to zoom in, but the commands themselves aren’t that important.

# Deploying Everything With No Downtime

At this point I had to deploy both websites, followed by nginx. They are separate tasks.

Deploying both sites could be run in parallel. Each deploy takes about 7 seconds, and most of that time is spent building the site locally with Jekyll before it gets sent over to the server using rsync.

So I ran both of those together and it was a success. At this point both new versions of the site were running and there was no downtime.
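I ran the two deploys side by side in tmux panels. If you’d rather script that step, here’s a hedged Python sketch that starts several commands at once and waits for all of them. The demo uses stand-in commands, and run_in_parallel is a hypothetical helper, not part of any deploy tool.

```python
import subprocess
import sys
from concurrent.futures import ThreadPoolExecutor
from typing import List, Sequence


def run_in_parallel(commands: Sequence[Sequence[str]]) -> List[int]:
    """Run every command concurrently and return their exit codes in input order."""
    def run(cmd: Sequence[str]) -> int:
        # Each call blocks its own worker thread until the subprocess exits.
        return subprocess.run(list(cmd)).returncode

    with ThreadPoolExecutor(max_workers=max(1, len(commands))) as pool:
        return list(pool.map(run, commands))


if __name__ == "__main__":
    # Stand-in commands; real ones would build each site with Jekyll and rsync it.
    demo = [
        [sys.executable, "-c", "print('site A deployed')"],
        [sys.executable, "-c", "print('site B deployed')"],
    ]
    print(run_in_parallel(demo))
```

With hypothetical deploy scripts, a call might look like run_in_parallel([["./deploy-diveintodocker"], ["./deploy-nickjanetakis"]]). Any nonzero exit code tells you which deploy to look at.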

nginx will automatically serve the new content without a restart, which is why there was no downtime. I did redesign this site, so there was a 3 second window where the CSS looked crazy (old styles being applied to the new markup) but that was acceptable to me.

I could have avoided that by deploying the new version of the site into a different folder on the server and then running mv to move it into the proper folder once it was fully transferred, but I didn’t think that was worth the effort.
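The mv idea boils down to uploading into a separate folder and swapping it in only once the transfer is complete. A common variant (swapped in here, not what this site uses) keeps each upload in its own release folder, points nginx’s root at a symlink, and replaces that symlink with a single rename. Here’s a minimal Python sketch, assuming a POSIX server and that the live path is either missing or already a symlink. The folder names and helper names are hypothetical.

```python
import os
import tempfile
from pathlib import Path


def activate_release(release_dir: Path, live_link: Path) -> None:
    """Atomically point live_link at release_dir (POSIX only).

    Assumes live_link is either missing or already a symlink; a real
    directory at that path would make the final rename fail.
    """
    tmp_link = live_link.with_name(live_link.name + ".tmp")
    if tmp_link.is_symlink() or tmp_link.exists():
        tmp_link.unlink()  # leftover from an interrupted run
    os.symlink(release_dir, tmp_link)
    # os.replace uses rename(2), which swaps the link in one atomic step, so
    # requests see either the old release or the new one, never a half-copied folder.
    os.replace(tmp_link, live_link)


def demo() -> str:
    """Swap between two throwaway releases and return what the live path serves."""
    with tempfile.TemporaryDirectory() as root:
        base = Path(root)
        for name, html in (("release-1", "old"), ("release-2", "new")):
            (base / name).mkdir()
            (base / name / "index.html").write_text(html)
        live = base / "public"
        activate_release(base / "release-1", live)
        activate_release(base / "release-2", live)
        return (live / "index.html").read_text()


if __name__ == "__main__":
    print(demo())
```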

Next up was nginx, and that was painless. It took about 10 seconds for Ansible to do its thing, and during that time there was no downtime. Just kidding, there was about 50ms of downtime at the end when nginx needed to be restarted due to the config changes. To me, that’s essentially the same as no downtime for this use case. I’m not at Google scale.
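For what it’s worth, nginx can usually pick up config changes without a full restart: `nginx -t` checks the configuration, and `nginx -s reload` tells the running master process to start new workers with the new config while the old workers finish their in-flight requests. Here’s a hedged Python sketch of that sequence. It’s an alternative, not the Ansible setup described here, and it assumes the nginx binary is on the PATH and the script is allowed to signal it. The run parameter is injectable so the logic can be checked without nginx installed.

```python
import subprocess
from typing import Callable, List

Runner = Callable[[List[str]], int]


def run_command(cmd: List[str]) -> int:
    """Default runner: execute the command and return its exit code."""
    return subprocess.run(cmd).returncode


def reload_nginx(run: Runner = run_command) -> bool:
    """Validate the nginx config, then reload it gracefully. Returns True on success."""
    if run(["nginx", "-t"]) != 0:
        # Broken config: skip the reload so nginx keeps serving with the old config.
        return False
    return run(["nginx", "-s", "reload"]) == 0
```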

At this point all of the redirects worked and the new versions of both sites were live, yay! Now I just needed to figure out how to migrate my old comments and social shares.

# Losing Disqus Comments and Not Getting Support

Disqus has a number of comment migration tools that make switching domain names easy, but none of them work when you have 2 sites where each site has its own short name (even if it’s under the same account).

I even posted about it on Disqus’ official support forum, but no one replied. Honestly, this makes me question whether I want to use Disqus in the future. I really don’t like that there’s no way to contact support directly, and I lost a lot of comments because of it.

If anyone knows of a solution, I’d really appreciate it if you left a comment (irony anyone?). A lot of great people made valuable comments and it would be a shame to lose them forever.

To prevent this in the future, consider using the same short name for multiple sites (but that conceptually seems wrong to me).

# Losing Social Share Counts but Not Caring Enough

I’m not obsessed with social sharing, but I do think it adds a nice touch to a page because it lets visitors quickly share a link if they want to. For that I use a service called sumo.com.

Edit: I no longer use Sumo. I just have plain old share buttons but I’m leaving the original article’s content in place just in case you’re using a social service that keeps the share count.

Before moving the 27 Docker-related articles over, I had a number of articles with over 1,000 social shares. All in all, I had about 6,000 shares across all of the articles.

sumo.com and a lot of other sharing sites grab their share counts directly from Open Graph. Facebook created the protocol, but many other sites and services read it too.

The problem is that Open Graph tags (they are meta tags) are associated with a specific URL. For example, here’s the og:url tag for this post:

```html
<meta property="og:url" content="https://nickjanetakis.com/blog/how-to-move-a-site-to-a-different-domain-without-downtime-or-404s">
```

That means all of the Docker articles I moved over were associated with the diveintodocker.com domain on Facebook’s side through Open Graph.
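Since counts are keyed by the page’s og:url, it helps to see exactly which URL a page declares. Here’s a small Python sketch that pulls the og:url out of a page’s HTML using only the standard library. The sample URL in the usage note is hypothetical, and the sketch only reads the first og:url tag it finds.

```python
from html.parser import HTMLParser
from typing import Optional


class OgUrlParser(HTMLParser):
    """Collects the content of the first <meta property="og:url"> tag."""

    def __init__(self) -> None:
        super().__init__()
        self.og_url: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        if tag != "meta" or self.og_url is not None:
            return
        attributes = dict(attrs)
        if attributes.get("property") == "og:url":
            self.og_url = attributes.get("content")


def og_url(html: str) -> Optional[str]:
    """Return the page's declared og:url, or None if it has none."""
    parser = OgUrlParser()
    parser.feed(html)
    return parser.og_url
```

Feeding it a moved article from the old domain would return something like https://diveintodocker.com/blog/hello-world, which is the URL the old share count belongs to, while the copy on the new domain declares a different URL and starts from zero.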

# Facebook’s Open Graph Doesn’t Understand 301 Redirects

To keep the old share counts, you need to keep the old URLs up and have them respond with a 200. That means configuring your web server to exempt Facebook’s crawler from your 301s.

This way every other crawler / visitor will get the redirect, but Facebook will still see a 200.
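The decision itself is small. Here’s a Python sketch of the logic, assuming Facebook’s crawler can be recognized by the substrings facebookexternalhit or Facebot in its User-Agent header (check Facebook’s crawler documentation before relying on that). In nginx you’d typically express the same idea with a map or if block on $http_user_agent. The name status_for is hypothetical.

```python
# Assumption: these substrings identify Facebook's crawler; verify against Facebook's docs.
FACEBOOK_CRAWLER_MARKERS = ("facebookexternalhit", "facebot")


def status_for(user_agent: str) -> int:
    """Return 200 for Facebook's crawler (so it keeps reading the old page), else 301."""
    ua = user_agent.lower()
    if any(marker in ua for marker in FACEBOOK_CRAWLER_MARKERS):
        return 200
    return 301
```

The old page would also have to keep serving its original HTML, including the original og:url, for this to preserve the count.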

It’s not a technically difficult task; it’s just a burden I didn’t feel like maintaining. My outlook is that while I will lose the old share counts, I’ll hopefully gain new shares since the old URLs still work.

In either case, it’s worth knowing about this scenario if you decide to change URLs.

# HTTP and HTTPS Are Considered Different URLs

It’s also worth mentioning that going from http to https counts as a different URL, so you might want to make sure you launch with https from the start.

If you want to learn how, I just launched a course that covers setting it up with Let’s Encrypt.